Pod Delete

Introduction

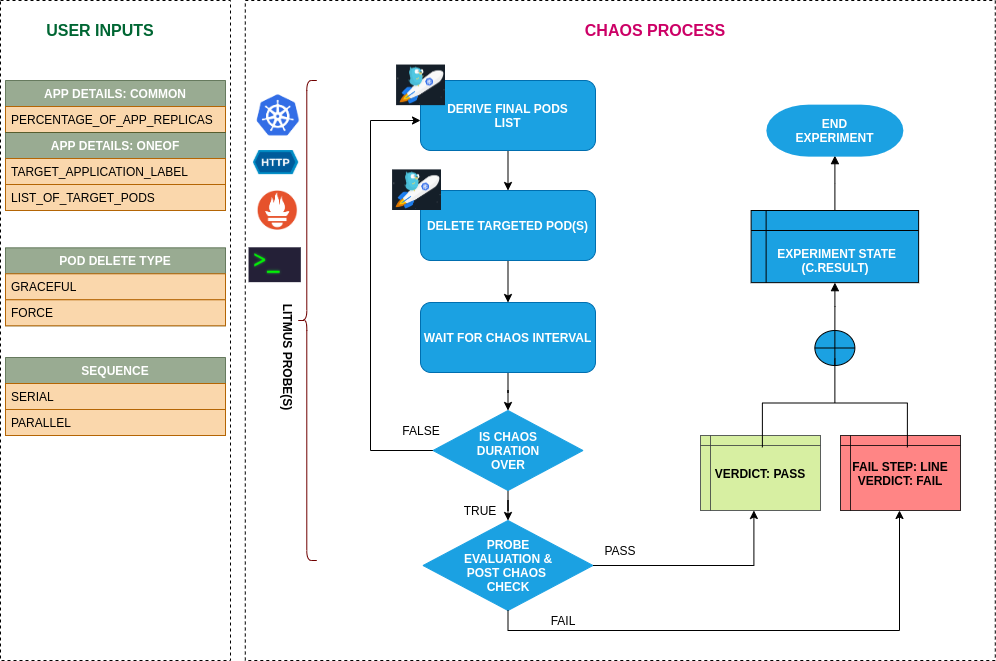

- It causes (forced or graceful) pod failure of specific or random replicas of an application resource.

- It tests deployment sanity (replica availability & uninterrupted service) and the recovery workflow of the application.

Scenario: Deletes a Kubernetes pod

Uses

In a distributed system like Kubernetes, your application replicas may not be sufficient to handle the traffic (as indicated by SLIs) when some of them become unavailable due to a failure, whether system or application. To keep meeting its SLOs (service level objectives), the application needs a minimum number of available replicas. A common failure mode is increased pressure on the remaining replicas, which makes it important to observe how the horizontal pod autoscaler scales based on observed resource utilization and how long PV mounts take upon rescheduling. Other important aspects to test are the MTTR of an application replica, leader/follower re-election (for example, broker leader selection in Kafka), validation of the minimum quorum needed to run the application (for example, in Percona), and resync/redistribution of data.

This experiment helps to reproduce such a scenario with forced/graceful pod failure on specific or random replicas of an application resource and checks the deployment sanity (replica availability & uninterrupted service) and recovery workflow of the application.

Prerequisites

- Ensure that the Kubernetes version is > 1.16

- Ensure that the Litmus Chaos Operator is running by executing `kubectl get pods` in the operator namespace (typically, `litmus`). If not, install from here

- Ensure that the `pod-delete` experiment resource is available in the cluster by executing `kubectl get chaosexperiments` in the desired namespace. If not, install from here

Default Validations

The application pods should be in the Running state before and after chaos injection.

Minimal RBAC configuration example (optional)

NOTE

If you are using this experiment as part of a litmus workflow constructed, scheduled, and executed from chaos-center, you may be making use of the litmus-admin RBAC, which is pre-installed in the cluster as part of the agent setup.


```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-delete-sa
  namespace: default
  labels:
    name: pod-delete-sa
    app.kubernetes.io/part-of: litmus
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-delete-sa
  namespace: default
  labels:
    name: pod-delete-sa
    app.kubernetes.io/part-of: litmus
rules:
  # Create and monitor the experiment & helper pods
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update", "deletecollection"]
  # Performs CRUD operations on the events inside chaosengine and chaosresult
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create","get","list","patch","update"]
  # Fetch configmaps details and mount them to the experiment pod (if specified)
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get","list"]
  # Track and get the runner, experiment, and helper pods log
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  # for executing commands inside the target container
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["get","list","create"]
  # deriving the parent/owner details of the pod (if parent is any of {deployment, statefulset, daemonsets})
  - apiGroups: ["apps"]
    resources: ["deployments","statefulsets","replicasets", "daemonsets"]
    verbs: ["list","get"]
  # deriving the parent/owner details of the pod (if parent is deploymentConfig)
  - apiGroups: ["apps.openshift.io"]
    resources: ["deploymentconfigs"]
    verbs: ["list","get"]
  # deriving the parent/owner details of the pod (if parent is deploymentConfig)
  - apiGroups: [""]
    resources: ["replicationcontrollers"]
    verbs: ["get","list"]
  # deriving the parent/owner details of the pod (if parent is argo-rollouts)
  - apiGroups: ["argoproj.io"]
    resources: ["rollouts"]
    verbs: ["list","get"]
  # for configuring and monitoring the experiment job by the chaos-runner pod
  - apiGroups: ["batch"]
    resources: ["jobs"]
    verbs: ["create","list","get","delete","deletecollection"]
  # for creation, status polling and deletion of litmus chaos resources used within a chaos workflow
  - apiGroups: ["litmuschaos.io"]
    resources: ["chaosengines","chaosexperiments","chaosresults"]
    verbs: ["create","list","get","patch","update","delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-delete-sa
  namespace: default
  labels:
    name: pod-delete-sa
    app.kubernetes.io/part-of: litmus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-delete-sa
subjects:
- kind: ServiceAccount
  name: pod-delete-sa
  namespace: default
```

Experiment tunables

Optional Fields

| Variables | Description | Notes |
|---|---|---|
| TOTAL_CHAOS_DURATION | The time duration for chaos injection (in sec) | Defaults to 15s. NOTE: The overall run duration of the experiment may exceed TOTAL_CHAOS_DURATION by a few minutes |
| CHAOS_INTERVAL | Time interval between two successive pod failures (in sec) | Defaults to 5s |
| RANDOMNESS | Introduces randomness to pod deletions with a minimum period defined by CHAOS_INTERVAL | Supports true or false. Default value: false |
| FORCE | Application pod deletion mode. false indicates graceful deletion with the default termination period of 30s; true indicates an immediate forceful deletion with a 0s grace period | Defaults to true, with terminationGracePeriodSeconds=0 |
| TARGET_PODS | Comma-separated list of application pod names subjected to pod delete chaos | If not provided, target pods are selected randomly based on the provided appLabels |
| PODS_AFFECTED_PERC | The percentage of total pods to target | Defaults to 0 (corresponds to 1 replica); provide a numeric value only |
| RAMP_TIME | Period to wait before and after injection of chaos (in sec) | |
| SEQUENCE | Defines the sequence of chaos execution for multiple target pods | Default value: parallel. Supported: serial, parallel |
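The other tunables in the table are passed the same way, as env entries on the pod-delete experiment. The sketch below combines PODS_AFFECTED_PERC, SEQUENCE, and RAMP_TIME on the same engine-nginx setup used in the examples on this page; the values are illustrative assumptions, not recommended defaults:

```yaml
# target a percentage of the matching pods, one at a time, with a ramp period
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: pod-delete-sa
  experiments:
  - name: pod-delete
    spec:
      components:
        env:
        # percentage of the pods matching applabel to target (numeric value only)
        - name: PODS_AFFECTED_PERC
          value: '50'
        # delete the selected pods one after another instead of in parallel
        - name: SEQUENCE
          value: 'serial'
        # wait 10s before and after chaos injection (illustrative value)
        - name: RAMP_TIME
          value: '10'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```

With four matching replicas, a PODS_AFFECTED_PERC of 50 would target about two pods, and `serial` deletes them one after another rather than all at once.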

Experiment Examples

Common and Pod specific tunables

Refer to the common attributes and Pod specific tunables to tune the tunables common to all experiments and those specific to pod experiments.
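Among the pod-specific tunables in the table, TARGET_PODS restricts the chaos to named pods instead of the random selection based on applabel. A minimal sketch, in which the pod names are hypothetical placeholders to replace with real pod names from the application namespace:

```yaml
# delete specific, named pods instead of randomly selected ones
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: pod-delete-sa
  experiments:
  - name: pod-delete
    spec:
      components:
        env:
        # comma-separated list of pod names (hypothetical placeholders)
        - name: TARGET_PODS
          value: 'nginx-pod-1,nginx-pod-2'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```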

Force Delete

The targeted pod can be deleted forcefully or gracefully, as controlled by the FORCE ENV: it is deleted forcefully if FORCE is set to true and gracefully if FORCE is set to false.

Use the following example to tune this:

```yaml
# tune the deletion of target pods forcefully or gracefully
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: pod-delete-sa
  experiments:
  - name: pod-delete
    spec:
      components:
        env:
        # provided as true for the force deletion of pod
        # supports true and false value
        - name: FORCE
          value: 'true'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```
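To delete the target pods gracefully instead, set FORCE to 'false'; per the tunables table, the pods then go through the default 30s termination period. A minimal sketch of the env section only, assuming the rest of the ChaosEngine is identical to the force-delete example:

```yaml
# env section only; the surrounding ChaosEngine is assumed unchanged
env:
# false requests graceful deletion using the pod's termination grace period
- name: FORCE
  value: 'false'
- name: TOTAL_CHAOS_DURATION
  value: '60'
```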

Multiple Iterations Of Chaos

Multiple iterations of chaos can be tuned by setting the CHAOS_INTERVAL ENV, which defines the delay between successive iterations of chaos.

Use the following example to tune this:

```yaml
# defines delay between each successive iteration of the chaos
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: pod-delete-sa
  experiments:
  - name: pod-delete
    spec:
      components:
        env:
        # delay between each iteration of chaos
        - name: CHAOS_INTERVAL
          value: '15'
        # time duration for the chaos execution
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```
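As a rough worked estimate for the example above, write $T$ for TOTAL_CHAOS_DURATION and $I$ for CHAOS_INTERVAL. Assuming the experiment deletes pods, waits $I$, and starts a new iteration only while less than $T$ seconds have elapsed, and ignoring the time each deletion and recovery check takes, the number of iterations $N$ is at most:

$$
N \le \left\lceil \frac{T}{I} \right\rceil = \left\lceil \frac{60}{15} \right\rceil = 4
$$

In practice the time spent on each deletion and recovery check lengthens every iteration, so fewer rounds may fit, and the last round can run past $T$, which is consistent with the note on TOTAL_CHAOS_DURATION in the tunables table.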

Random Interval

Randomness in the chaos interval can be enabled by setting the RANDOMNESS ENV to true. It supports boolean values. The default value is false.

The chaos interval can be tuned via the CHAOS_INTERVAL ENV.

- If CHAOS_INTERVAL is set in the form of `l-r`, e.g. `5-10`, then it will select a random interval between l and r.

- If CHAOS_INTERVAL is set in the form of `value`, e.g. `10`, then it will select a random interval between 0 and value.

Use the following example to tune this:

```yaml
# contains random chaos interval with lower and upper bound of range i.e [l,r]
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: pod-delete-sa
  experiments:
  - name: pod-delete
    spec:
      components:
        env:
        # randomness enables iterations at random time interval
        # it supports true and false value
        - name: RANDOMNESS
          value: 'true'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
        # it will select a random interval within this range
        # if only one value is provided then it will select a random interval within 0-CHAOS_INTERVAL range
        - name: CHAOS_INTERVAL
          value: '5-10'
```
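The example above uses the `l-r` range form. For the single-value form, where the interval is picked randomly between 0 and the given value, only CHAOS_INTERVAL changes. A minimal sketch of the env section, assuming the rest of the ChaosEngine is unchanged:

```yaml
# env section only; the surrounding ChaosEngine is assumed unchanged
env:
# randomness enables iterations at random time intervals
- name: RANDOMNESS
  value: 'true'
- name: TOTAL_CHAOS_DURATION
  value: '60'
# single value: a random interval between 0 and 10 seconds is selected
- name: CHAOS_INTERVAL
  value: '10'
```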